Research into Modern Deepfaking Utilities While on the Path to Trolling Friends

First off, why write this blog post?

Well, I was honestly shocked at how effective the application is and how few checks it performs. I wanted to raise awareness of the application and how it functions, not just as a “hey, look, here is an app” post, but also to show the dangers of applications like this and how they can be used to cyberbully people. I also want to alert parents and guardians to the existence of this app and what it can do.

There is something I’ve always been told: everything is a tool until it’s used as a weapon. This application is a tool intended for humour, but it can easily be used as a weapon. I was shocked at the lack of checks preventing children from downloading it (it is currently listed on Google Play with an age rating of 12) and at the lack of content checks within the app. With over 10 million downloads and 300k reviews, I think it’s about time the app took safety a little more seriously and raised its age rating.

So what brought this about?

So, while hanging out with the guys on Discord as usual, we started joking about a certain tweet saying they didn’t need Twitter security people. The guys were trying to access the app using faked photos to do vulnerability testing on the platform. Then the discussion turned to FaceApp and DeepFakes: converting an image to look female so we could access the application.

So what are DeepFakes? To put it simply:

“DeepFakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness.” (Wikipedia)

Basically, a deepfake application uses a well-trained neural network to identify key features of the human face and body, then overlays another provided image so that the original video or image appears to show the selected person. These tools are often written in Python using multiple libraries and used to be closely guarded secrets, but in recent times they have become extremely common and accepted in everyday use, from adults to kids who fake images of themselves onto posters or into videos. Most of the code for these applications can be found with a simple Google search, meaning anyone with a little knowledge can put the application together and run it.

The conversation then took a turn as we started joking about using the app to make each other look terrible, until someone shared a gif of Fen that had been DeepFaked with an application called Reface.

So what is Reface? It is a free application available on both the Apple App Store and the Google Play Store. The application is owned by a company called NEOCORTEXT, INC., located in Delaware, USA, although it is rumoured to be operated from Russia. It is the only application under this company’s name that appears on the Google Play Store; I was unable to verify the same for the Apple App Store. The application works by having users provide photos, which are uploaded to Reface’s servers via an API they call RefaceAI.

As you can imagine, we jumped on this very quickly: an app that lets us mess with gifs using our friends’ faces. The trolling went crazy, deepfaking CyberSecStu for hours before finally, in the early morning, moving on to the best target, SecFenrir. This turned out to be some of the funniest content we could have produced, at the expense of our friends.

Below is an example of this.

Both hilarious and disturbing, I know, but we do what we do for the joy and destruction of pure boredom. And don’t worry, we didn’t stop there; we just kept going, making all the memes we could and spending hours laughing. Below are stills from some of the other DeepFakes we did of Fen, from left to right:

Beyoncé -> Fen | Christina Aguilera -> Fen | Adam Sandler (Little Nicky) -> Fen

If you know Fen, there is a very high chance you are crying with laughter right now, as we were. But the conversation soon turned to something concerning: porn. I think it started with the common deepfakes seen on popular porn sites and places like Reddit.

Deepfaked celebrity porn is nothing new. There are Reddit boards dedicated to it and entire sections of porn websites dedicated to it; in fact, a simple Google search returns over 16,000,000 results related to DeepFakes, including entire websites hosting only deepfaked content. These websites primarily host celebrity-based content, but it may get to a point where that is no longer the case. DeepFake tools used to be complicated to set up, but recently many tools have been developed to make this easier, and it is starting to get scary how accurate these applications are becoming.

These apps have been surrounded by controversy from the start, from apps such as DeepNude, which takes an image of a woman and uses neural networks to make her appear nude, to FaceApp hosting its servers in Russia, to (I’m guessing) Reface itself once this post goes up.

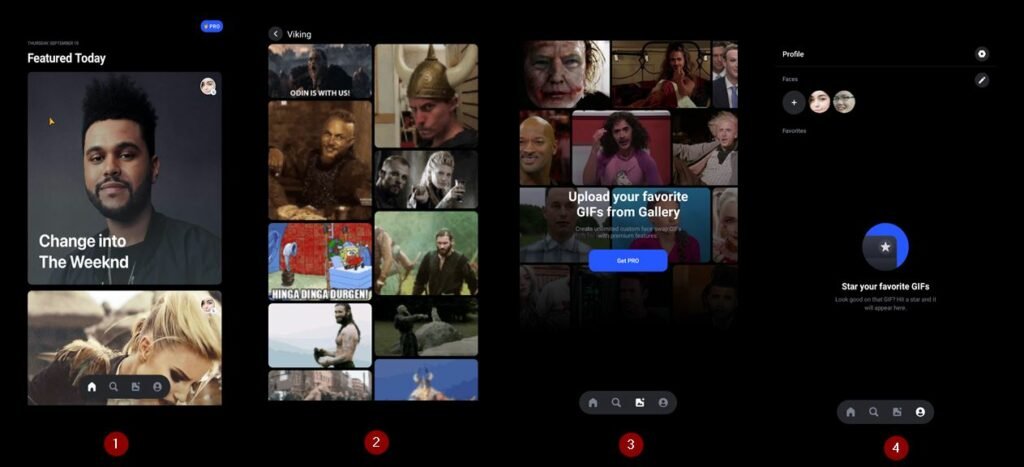

While looking at the Reface application, we wondered what functions it has for interacting with and modifying imagery, so let’s break down what you see in the image above.

- Allows you to select the top featured videos or gifs (a mix of music videos and movies; pretty normal gifs)

- Allows you to search and use gifs from Tenor, the same gifs found on the gif keyboards in Facebook or Discord

- Allows you to add your own gifs from your gallery (this requires purchasing premium features in the app)

- Allows you to add more faces to the program and save your favourite gifs

From here we realized we could just grab anyone’s photo from platforms like Facebook or Instagram, or from video content, and upload it into the app. These could be selfies or any photo we wanted, as long as a face was present. And it’s not just faces: if you purchase the premium features, you can also provide your own gif to have a face added to it. So we also thought: could we convert a porn video to a gif, upload it into the app, and fake a face over it?

The main question being:

“Do they have filters for detecting nudity in photos to block deepfaked porn?”

Nope… there were none.

The application took the medium-ish-quality image of Fen’s profile picture that we uploaded and stitched it onto a porn gif downloaded from Google for the testing (to add, the gif features a professional porn actress who will not be named).

The scary and disturbing part is that, as you can see, even with a male face on a female body it matched and seemed to work well. There was little indication that this was a deepfake, other than Fen’s glasses and terrible moustache. We can all assume that Fen will be getting some calls over the next few weeks.

We found one other interesting thing: all the images and gifs provided were mirrored by the software. Why this is done is unclear.

So we know it stitches a guy’s face on fine, but we couldn’t leave it there; we needed to test more. How well would a female face work?

I didn’t feel comfortable asking a female friend to do this, and I think I got enough of a laugh out of Fen over this post, so I took a selfie and used FaceApp, a similar app that works on photos rather than gifs, to convert that selfie into a female version of me.

I then imported that female version of me into the application and DeepFaked it onto the same porn gif… but before I show you that, I need to take a break to say “How you doin’?” to myself, haha. Now back to the research: the photo in the middle is the original downloaded gif and the one on the right is the refaced image.

The future possibilities of this technology are scary; every time another application appears, it seems better than the last. This post covers only one of the applications currently running rampant through multiple communities without checks, and schools are starting back up now.

I have a horrible feeling that publicly available applications like this will make bullying children in schools easier for the bullies, or worse. I feel it is important for both educators and parents to know about this application and understand its abilities before it is used as a weapon to harm those we care about.

Using this technology to cyberbully people is unfortunately not just a thought experiment or fiction. It has already been employed by many groups to smear or attack people, including celebrities. A very public example is a group using deepfakes to fake Jay-Z singing a song. That is only one of multiple instances, which include celebrities being faked into porn, as demonstrated above, or, on an even worse scale, deepfakes being used in politics. For example, the deepfake video of Obama posted by BuzzFeed brought the idea of weaponized DeepFakes to the forefront of everyone’s minds, since groups could use the same technique offensively to produce videos like it.

Detecting deepfaked material like this is getting harder and harder, even for many AI-based detectors: in testing, the photos of Fen above passed AI detection and were marked as “no manipulation detected”. This is possible because the output is no longer a doctored file; instead, two separate files are combined and used to generate a completely new file, separate from the original.

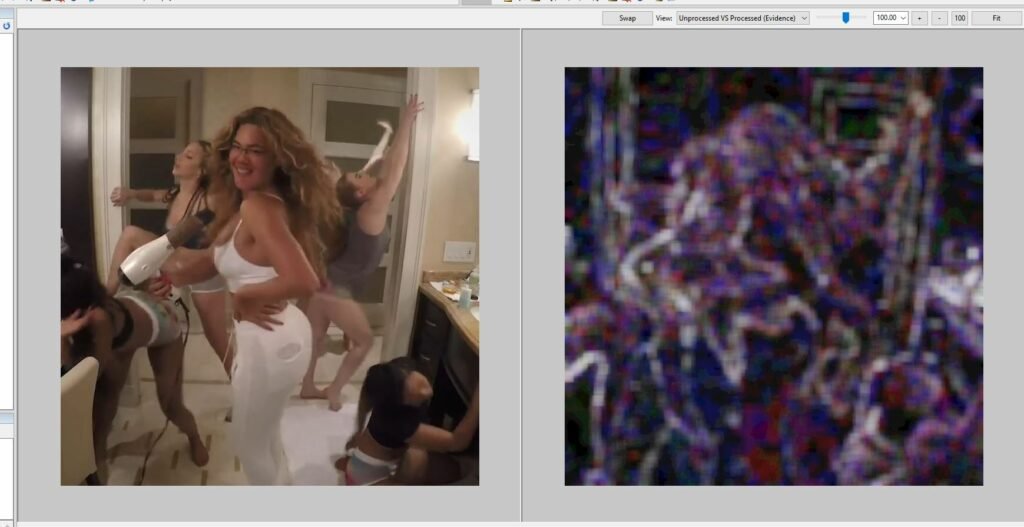

In many past investigations, utilities such as ELA (Error Level Analysis) could be used; ELA analyses compression artifacts in an image to detect changes or modifications such as layered imagery. There are many online and professional tools for these checks. For example, the tool shown above, a professional-level tool used by many law enforcement and intelligence agencies, was unable to detect the changes with simple ELA checks or with a few other detection attempts. Again, I feel this is because the output is a completely new file, as I can confirm the tool works perfectly at detecting edits made with other applications such as FaceApp.
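For anyone curious what a basic ELA check actually involves, here is a minimal sketch in Python, assuming the Pillow imaging library is installed. It re-saves an image once as JPEG at a fixed quality (90 here, an arbitrary but common choice), takes the per-pixel difference from the original, and stretches that difference so it is visible; regions whose compression history differs from the rest of the image tend to stand out. The `make_demo_image` and `region_error` helpers are hypothetical illustrations, not part of any tool mentioned in this post, and the flat-plus-noise demo image only shows that error levels vary by region. This is not the professional tool used above.

```python
import io
import random

from PIL import Image, ImageChops, ImageStat

# Assumption: Pillow is installed; quality=90 is an arbitrary re-save level.


def error_level_analysis(image: Image.Image, quality: int = 90) -> tuple[Image.Image, int]:
    """Re-save `image` as JPEG and return (amplified difference image, max raw difference)."""
    original = image.convert("RGB")

    # Re-compress the image once, in memory, at a known JPEG quality.
    buffer = io.BytesIO()
    original.save(buffer, format="JPEG", quality=quality)
    buffer.seek(0)
    resaved = Image.open(buffer).convert("RGB")

    # Absolute per-channel difference between the input and its re-saved copy.
    diff = ImageChops.difference(original, resaved)

    # Largest raw difference across the R, G and B channels.
    max_error = max(band_max for _, band_max in diff.getextrema())
    if max_error == 0:
        return diff, 0

    # Stretch the (usually faint) differences to the full 0-255 range.
    amplified = diff.point(lambda v: min(255, v * 255 // max_error))
    return amplified, max_error


def region_error(ela: Image.Image, box: tuple[int, int, int, int]) -> float:
    """Hypothetical helper: summed mean error level of the channels inside `box`."""
    return sum(ImageStat.Stat(ela.crop(box)).mean)


def make_demo_image(size: int = 64, seed: int = 0) -> Image.Image:
    """Hypothetical test image: flat grey on the left half, random noise on the right."""
    rng = random.Random(seed)
    img = Image.new("RGB", (size, size), (128, 128, 128))
    for x in range(size // 2, size):
        for y in range(size):
            img.putpixel((x, y), tuple(rng.randrange(256) for _ in range(3)))
    return img


if __name__ == "__main__":
    demo = make_demo_image()
    ela, max_error = error_level_analysis(demo)
    print("max raw error:", max_error)
    print("flat half:", region_error(ela, (0, 0, 32, 64)))
    print("noisy half:", region_error(ela, (32, 0, 64, 64)))
```

Running it should show a much higher error level in the noisy half than in the flat half. A freshly generated output that has been compressed once as a whole leaves no such mismatch between regions, which fits the idea above that a completely new file gives ELA little to work with.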

To finish up this blog post, here is a gif that explains the app and the future of this technology in simple terms.